Introduction

Running a crisis drill feels productive. You gather the team, walk through scenarios, check boxes on your compliance list. But here's the uncomfortable truth: most organizations have no idea if their drills actually predict real-world performance. They measure attendance and completion rates while ignoring the metric that matters most when a power outage hits or a cyberattack locks down systems: response time.

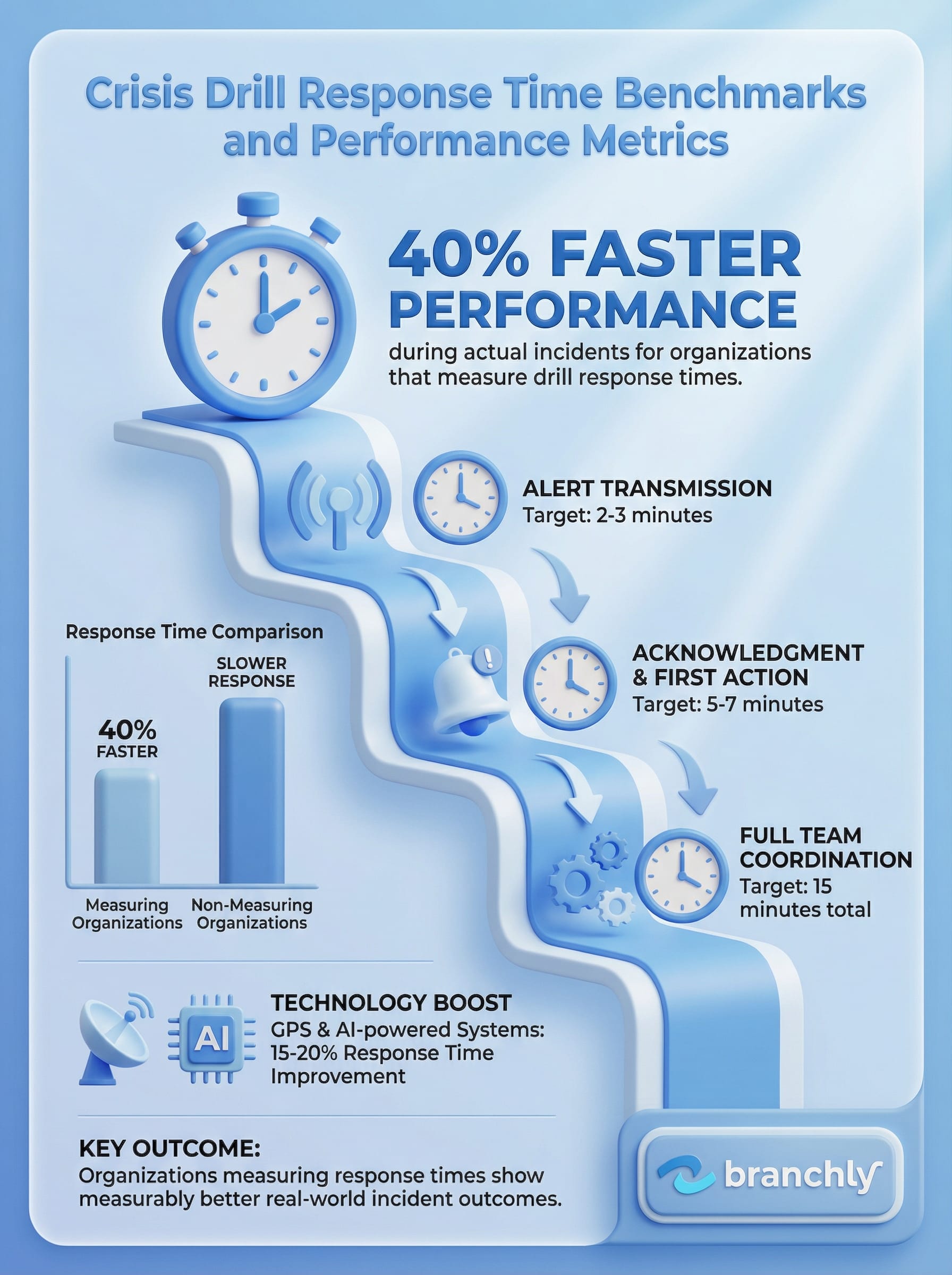

Response time isn't just about speed. It's a diagnostic tool that reveals coordination gaps, communication breakdowns, and decision-making bottlenecks that only surface under pressure. Organizations that track response times during drills improve their real incident performance by 40%. Those that don't? They discover their weaknesses when customers are watching and regulators are asking questions.

What Response Time Actually Measures

Response time in crisis drills tracks the interval from incident declaration to the start of appropriate action. That sounds simple, but it captures multiple failure points: notification delays, decision paralysis, unclear role assignments, and coordination friction across locations.

For multi-location organizations, response time breaks into measurable components. Alert transmission time measures how long it takes to notify all relevant personnel. Acknowledgment time tracks how quickly team members confirm receipt and availability. Activation time captures the gap between acknowledgment and the start of playbook execution. Each stage reveals different organizational capabilities.
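As a rough illustration, the three stages can be computed from per-person timestamps that a notification or logging system records during a drill. This is a minimal sketch: the `PersonTimeline` fields are hypothetical, and reporting both the median and the worst (slowest) person per stage is an assumption, not a standard.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class PersonTimeline:
    """Hypothetical per-person timestamps captured during one drill."""
    name: str
    delivered: datetime       # alert reached this person
    acknowledged: datetime    # person confirmed receipt and availability
    action_started: datetime  # person began executing playbook tasks


def minutes_between(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 60


def stage_breakdown(declared: datetime, team: list[PersonTimeline]) -> dict[str, dict[str, float]]:
    """Split response time into transmission, acknowledgment, and activation stages.

    Each stage reports the median gap and the worst (slowest) gap, in minutes.
    """
    if not team:
        raise ValueError("team must contain at least one person")
    stages = {
        "transmission": [minutes_between(declared, p.delivered) for p in team],
        "acknowledgment": [minutes_between(p.delivered, p.acknowledged) for p in team],
        "activation": [minutes_between(p.acknowledged, p.action_started) for p in team],
    }
    return {name: {"median": median(gaps), "worst": max(gaps)} for name, gaps in stages.items()}


if __name__ == "__main__":
    t0 = datetime(2024, 3, 5, 14, 0)  # incident declared
    team = [
        PersonTimeline("ops lead", t0.replace(minute=1), t0.replace(minute=2), t0.replace(minute=6)),
        PersonTimeline("it lead", t0.replace(minute=1), t0.replace(minute=4), t0.replace(minute=12)),
        PersonTimeline("branch manager", t0.replace(minute=3), t0.replace(minute=9), t0.replace(minute=15)),
    ]
    for stage, summary in stage_breakdown(t0, team).items():
        print(stage, summary)
```

Reporting the worst case next to the median matters because a healthy median can hide the one person who never saw the alert.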

Emergency services use response time as their primary performance indicator. Fire departments benchmark the interval from distress call to scene arrival. Urban ambulance services target 7-minute average response times, achieving 88% of responses within 10 minutes in metropolitan areas. Your organization faces different constraints, but the principle holds: time is the measure that correlates with outcomes.

Quick Baseline Test

Send an unannounced test alert to your crisis team right now. Measure: 1) Time until 50% acknowledge, 2) Time until 100% acknowledge, 3) Time until first person accesses the response plan. Most organizations are surprised by all three numbers.
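If your alerting tool can export acknowledgment times, the three numbers take only a few lines to compute. A minimal sketch, assuming you have the send time, each member's acknowledgment time (or `None` if they never responded), and every time someone opened the response plan; the function name and inputs are hypothetical.

```python
import math
from datetime import datetime
from typing import Optional


def quick_baseline(
    sent: datetime,
    acks: dict[str, Optional[datetime]],
    plan_opened: list[datetime],
) -> dict[str, Optional[float]]:
    """Minutes from the test alert to 50% acknowledgment, 100% acknowledgment,
    and first response-plan access. None means the milestone was never reached.
    """
    if not acks:
        raise ValueError("acks must list every crisis-team member")

    def minutes(t: datetime) -> float:
        return (t - sent).total_seconds() / 60

    # Sorted acknowledgment delays for members who responded at all.
    ack_minutes = sorted(minutes(t) for t in acks.values() if t is not None)
    needed_for_half = math.ceil(len(acks) / 2)

    return {
        "half_acknowledged": ack_minutes[needed_for_half - 1] if len(ack_minutes) >= needed_for_half else None,
        "all_acknowledged": ack_minutes[-1] if len(ack_minutes) == len(acks) else None,
        "first_plan_access": min((minutes(t) for t in plan_opened), default=None),
    }
```

A `None` for full acknowledgment is a finding in its own right: someone on the crisis team never saw or answered the alert.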

Industry Benchmarks That Matter for Multi-Location Operations

Setting realistic targets requires understanding both industry standards and your operational context. Urban emergency services average 7-8 minutes for critical responses. Rural operations face longer timelines, often exceeding 15 minutes due to geographic dispersion. Multi-location businesses fall somewhere in between, depending on their communication infrastructure and team distribution.

For business continuity scenarios, reasonable benchmarks look different from those for emergency medical response. Initial alert acknowledgment should happen within 2-3 minutes for critical incidents. First substantive action (accessing playbooks, initiating protocols) should start within 5-7 minutes. Full team coordination for a significant incident should be underway within 15 minutes.

Context matters enormously. After-hours incidents naturally take longer than business-hour responses. A credit union experiencing a core banking system outage at 2 PM on Tuesday should mobilize faster than the same incident at 11 PM on Saturday. Your benchmarks need to account for these variables rather than applying a single standard across all scenarios.
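One way to avoid applying a single standard to every scenario is to derive the targets from when the incident was declared. The sketch below uses the upper ends of the business-hours benchmarks above. The Monday-to-Friday 08:00-18:00 definition of business hours and the 1.5x after-hours allowance are placeholder assumptions; replace them with figures from your own after-hours drills.

```python
from datetime import datetime

# Upper ends of the business-hours benchmarks from the text, in minutes after declaration.
BUSINESS_HOURS_TARGETS = {"acknowledgment": 3.0, "first_action": 7.0, "full_coordination": 15.0}

# Hypothetical allowance for nights and weekends; calibrate it from your own drill data.
AFTER_HOURS_MULTIPLIER = 1.5


def is_business_hours(t: datetime) -> bool:
    """Assumes Monday-Friday, 08:00-18:00 local time."""
    return t.weekday() < 5 and 8 <= t.hour < 18


def targets_for(declared: datetime) -> dict[str, float]:
    factor = 1.0 if is_business_hours(declared) else AFTER_HOURS_MULTIPLIER
    return {stage: minutes * factor for stage, minutes in BUSINESS_HOURS_TARGETS.items()}


def missed_targets(declared: datetime, observed: dict[str, float]) -> list[str]:
    """Return the stages whose observed minutes exceed the context-adjusted target."""
    targets = targets_for(declared)
    return [stage for stage, minutes in observed.items() if minutes > targets[stage]]


if __name__ == "__main__":
    observed = {"acknowledgment": 4.0, "first_action": 6.0, "full_coordination": 20.0}
    print(missed_targets(datetime(2024, 3, 5, 14, 0), observed))  # a Tuesday, 2 PM
    print(missed_targets(datetime(2024, 3, 9, 23, 0), observed))  # a Saturday, 11 PM
```

The same observed times can miss the targets on a Tuesday afternoon and meet them on a Saturday night, which is exactly the distinction the credit union example draws.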

The 40% Improvement Gap

Organizations conducting regular tabletop exercises with measured response times show 40% faster performance during actual incidents compared to those that drill without metrics. The act of measurement itself drives improvement.

Traffic patterns affect real-world response just as they affect ambulance times. Peak hours can add 10 minutes when someone has to travel to a site in person. Weather adds 1-3 minutes on average. Major public events or mass casualty situations can increase response times by 25% or more due to resource constraints and access limitations.

What Slows You Down: Common Bottlenecks Revealed by Drill Metrics

Slow alert transmission usually indicates technical problems, not human ones. If it takes 5+ minutes to notify all team members, you're dealing with system issues: outdated contact lists, failed delivery through primary channels, or reliance on a single notification method. GPS tracking and AI-powered dispatch systems reduce emergency service response times by 15-20%. The same principle, automating notification and routing instead of relying on manual steps, applies to business crisis management.

Long gaps between notification and acknowledgment point to different issues. People aren't seeing alerts because they're in meetings, notifications are getting buried in noise, or the urgency level isn't clear. This problem intensifies during off-hours when team members aren't monitoring work channels actively.

The acknowledgment-to-action gap reveals the most insidious problem: people confirm they got the message but don't know what to do next. They can't find the playbook. They're unclear on their role. They're waiting for someone else to take the lead. This is where response falls apart, and it's the bottleneck that poor crisis planning creates.

Red Flag Times

Alert-to-acknowledgment over 5 minutes during business hours signals serious problems. Acknowledgment-to-action over 10 minutes means your playbooks aren't accessible or clear enough. Track both separately.
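Tracking both gaps separately is straightforward once each location's stage times are recorded. A minimal sketch, assuming stage times are already in minutes; the `StageTimes` record is hypothetical, while the 5- and 10-minute limits come from the red flags above.

```python
from dataclasses import dataclass

ALERT_TO_ACK_LIMIT = 5.0    # minutes; applies during business hours only
ACK_TO_ACTION_LIMIT = 10.0  # minutes


@dataclass
class StageTimes:
    """Hypothetical per-location drill result."""
    location: str
    alert_to_ack: float   # minutes
    ack_to_action: float  # minutes
    business_hours: bool


def red_flags(results: list[StageTimes]) -> dict[str, list[str]]:
    """Group locations by which red-flag threshold they crossed.

    The two gaps are checked independently, so a location can appear in both lists.
    """
    flags: dict[str, list[str]] = {"slow_acknowledgment": [], "slow_activation": []}
    for r in results:
        if r.business_hours and r.alert_to_ack > ALERT_TO_ACK_LIMIT:
            flags["slow_acknowledgment"].append(r.location)
        if r.ack_to_action > ACK_TO_ACTION_LIMIT:
            flags["slow_activation"].append(r.location)
    return flags
```

Keeping the two lists apart points each location toward the right fix: notification and attention problems in the first, playbook access and clarity problems in the second.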

Decision-making delays compound as incidents escalate. If your protocol requires approval from unavailable executives before taking basic protective actions, you've built delay into your system. The best response plans pre-authorize specific actions for specific scenarios, eliminating approval bottlenecks for time-sensitive decisions.
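Pre-authorization can be written down as plain data so nobody has to interpret it under pressure. This sketch uses made-up scenario and action names. The structure is what matters: actions listed for a scenario proceed immediately, and anything else is routed for approval.

```python
# Hypothetical scenarios and actions, for illustration only.
PRE_AUTHORIZED: dict[str, frozenset[str]] = {
    "core_system_outage": frozenset(
        {"switch_to_offline_procedures", "post_customer_notice", "open_bridge_call"}
    ),
    "facility_evacuation": frozenset(
        {"evacuate_building", "call_emergency_services", "secure_cash"}
    ),
}


def triage_actions(scenario: str, planned: list[str]) -> tuple[list[str], list[str]]:
    """Split planned actions into (act_now, needs_approval) for the given scenario.

    Unknown scenarios have no pre-authorized actions, so everything needs approval.
    """
    allowed = PRE_AUTHORIZED.get(scenario, frozenset())
    act_now = [a for a in planned if a in allowed]
    needs_approval = [a for a in planned if a not in allowed]
    return act_now, needs_approval
```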

How to Structure Drills That Produce Useful Data

Tabletop exercises work well for testing decision-making logic, but they don't produce accurate response time data. People mentally skip steps they'd struggle with in reality. They assume information would be available that actually takes time to gather. For timing benchmarks, you need higher-fidelity simulations.

Functional exercises test specific capabilities in real time without fully mobilizing operations. Send the actual alert through actual channels. Require team members to access actual playbooks and confirm actual tasks. Don't simulate these steps. The friction you encounter during functional exercises is the friction you'll face during real incidents.

Full-scale drills provide the richest data but demand significant resources. A regional bank running a core system outage drill across 40 branches learns where coordination breaks down, which locations have outdated procedures, and which staff need additional training. These exercises are expensive, but discovering gaps during drills costs less than discovering them during actual outages.

The Flu Drill Payoff

Australia conducted pandemic simulation exercises months before H1N1 emerged. When the real outbreak hit, their practiced response protocols led to efficient containment efforts and reduced economic impact compared to unprepared nations.

Unannounced drills produce the most realistic timing data. Announced exercises give people time to prepare, find passwords, and review procedures. That's valuable for training, but it doesn't measure actual readiness. Mix both types: regular announced drills for skill development, occasional unannounced tests for honest assessment.

Vary your timing deliberately. Test after-hours response. Run drills during known busy periods. Schedule exercises during shift changes. Each scenario reveals different vulnerabilities. A franchise network that only drills on Tuesday afternoons has no idea how it would perform if equipment failed during a Saturday dinner rush.
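A simple way to make the variation deliberate is to plan the drill calendar so every timing window is covered before any repeats, with a share of drills left unannounced. A minimal sketch; the window names and the 25% unannounced share in the example are hypothetical choices, and the random seed only makes the plan reproducible.

```python
import random
from typing import Optional

# Hypothetical timing windows; substitute the ones that matter to your operation.
WINDOWS = ["business_hours", "after_hours", "peak_period", "shift_change"]


def plan_drills(count: int, unannounced_share: float, seed: Optional[int] = None) -> list[dict]:
    """Plan `count` drills that rotate through WINDOWS, marking some as unannounced."""
    if not 0 <= unannounced_share <= 1:
        raise ValueError("unannounced_share must be between 0 and 1")
    rng = random.Random(seed)
    order = WINDOWS[:]
    rng.shuffle(order)  # random rotation order, but every window is used before any repeats
    unannounced = set(rng.sample(range(count), round(count * unannounced_share)))
    return [
        {"drill": i + 1, "window": order[i % len(order)], "announced": i not in unannounced}
        for i in range(count)
    ]


if __name__ == "__main__":
    for drill in plan_drills(8, 0.25, seed=7):
        print(drill)
```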

Beyond Speed: Other Metrics That Predict Real Performance

Task completion rates matter as much as speed. A team that mobilizes in 3 minutes but skips critical steps hasn't actually performed well. Track what percentage of required actions get completed during drills. Look for patterns in what gets missed. Safety checks are often cut short under time pressure. Customer communication gets delayed while teams focus on technical fixes.
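Completion rates and missed-step patterns fall out of a simple checklist comparison. A minimal sketch, assuming each drill's log can be reduced to the set of required steps that were actually completed; the step names in the example are hypothetical.

```python
from collections import Counter


def completion_report(required: list[str], drills: list[set[str]]) -> tuple[list[float], Counter]:
    """Return per-drill completion percentages and how often each required step was missed."""
    if not required:
        raise ValueError("required checklist is empty")
    rates: list[float] = []
    missed: Counter = Counter()
    for done in drills:
        completed = sum(1 for step in required if step in done)
        rates.append(100 * completed / len(required))
        missed.update(step for step in required if step not in done)
    return rates, missed


if __name__ == "__main__":
    required = ["notify_staff", "safety_check", "customer_notice", "restore_service"]
    drills = [
        {"notify_staff", "restore_service"},
        {"notify_staff", "safety_check", "restore_service"},
        {"notify_staff", "restore_service", "customer_notice"},
    ]
    rates, missed = completion_report(required, drills)
    print(rates)
    print(missed.most_common())
```

A step that shows up repeatedly in the missed counts is a pattern worth investigating, not a one-off slip.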

Role clarity surfaces through drill observation. If multiple people try to take charge or everyone waits for direction, your org chart doesn't match your crisis structure. Strong crisis response requires a clear command hierarchy, and that hierarchy may differ from the one used in normal operations. The branch manager who runs daily operations may not be the right person to manage facility evacuation.

Communication effectiveness shows up in information accuracy and flow. Did the right people get the right information at the right time? Or did critical updates get lost while irrelevant details circulated widely? Post-drill surveys asking participants to rate the clarity and usefulness of communications reveal gaps that timing data alone misses.

The Confidence Question

After each drill, ask participants: On a scale of 1-10, how confident are you in our ability to handle this scenario for real? Research shows this subjective measure strongly predicts actual performance. Scores below 7 indicate serious problems.
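Scoring the confidence question is simple arithmetic. The text doesn't say whether the threshold of 7 applies to the team average or to individuals, so this minimal sketch reports both; that choice, and the function name, are assumptions.

```python
from statistics import mean

LOW_CONFIDENCE = 7  # scores below this indicate serious problems, per the text


def confidence_summary(scores: dict[str, int]) -> dict[str, object]:
    """Summarize post-drill 1-10 confidence scores keyed by participant."""
    if not scores:
        raise ValueError("no scores collected")
    for name, score in scores.items():
        if not 1 <= score <= 10:
            raise ValueError(f"{name}: score {score} is outside 1-10")
    average = mean(list(scores.values()))
    return {
        "average": average,
        "average_below_threshold": average < LOW_CONFIDENCE,
        "low_scorers": sorted(n for n, s in scores.items() if s < LOW_CONFIDENCE),
    }
```

A passing average can still hide individual low scores, and those participants are often the ones who saw something the rest of the team missed.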

Documentation compliance becomes critical for regulated industries. Credit unions and banks need tamper-resistant logs showing who did what, and when. During drills, test whether your systems actually capture required information. A financial institution discovered during a drill that its incident logging system didn't timestamp entries, an audit exposure it was able to fix before regulators noticed.

Inter-location coordination poses unique challenges for multi-site operations. Measure how long it takes for Location A to notify Location B of relevant developments. Track whether centralized teams maintain visibility into what's happening at distributed sites. A hotel chain running a drill across 20 properties found that their regional command center lost track of six locations that went silent after initial check-in.
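Silent sites like these can be caught automatically by comparing each location's most recent status update against a maximum allowed silence. A minimal sketch; the location names and the 15-minute window are hypothetical, and a location that never checked in at all is treated as silent.

```python
from datetime import datetime, timedelta


def silent_locations(
    expected: list[str],
    last_update: dict[str, datetime],
    now: datetime,
    max_silence: timedelta,
) -> list[str]:
    """Return expected locations with no status update within `max_silence`, in input order."""
    silent = []
    for loc in expected:
        seen = last_update.get(loc)
        if seen is None or now - seen > max_silence:
            silent.append(loc)
    return silent
```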

Using Drill Data to Drive Continuous Improvement

After-action reports need quantitative anchors, not just qualitative observations. Compare this drill's response time to previous drills for the same scenario. Track whether times improve with each iteration. If they don't, your training isn't working or your procedures have fundamental problems.

Identify your slowest locations or teams and understand why. Sometimes it's staffing levels. Sometimes it's local leadership. Sometimes it's poor understanding of procedures. Each root cause requires different remediation. Treating all performance gaps the same way wastes resources and leaves some problems unsolved.
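Finding the slowest locations starts with a ranking that isn't skewed by one bad day. This minimal sketch ranks locations by their median response time across drills. Using the median rather than the mean is a design choice, and the input shape (location name mapped to a list of minutes) is an assumption.

```python
from statistics import median


def slowest_locations(times: dict[str, list[float]], top_n: int = 3) -> list[tuple[str, float]]:
    """Rank locations by median response time in minutes, slowest first.

    Locations with no recorded drills are skipped rather than ranked.
    """
    ranked = sorted(
        ((loc, median(runs)) for loc, runs in times.items() if runs),
        key=lambda item: item[1],
        reverse=True,
    )
    return ranked[:top_n]
```

The ranking only tells you where to look. Whether the cause is staffing, local leadership, or procedure knowledge still takes a conversation with that site.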

Playbook refinement should happen after every drill. Did people skip steps because those steps added no value? Remove them. Did people get confused at decision points? Clarify them. Did teams need information that wasn't readily available? Fix access or pre-position that data. Static playbooks that never change based on drill findings become outdated fast.

Technology Impact

Upgrading 911 infrastructure with GPS tracking and AI dispatch reduces emergency response times by 15-20%. Business crisis management sees similar gains from modern platforms that automate notification, provide one-click playbook access, and track response in real time.

Trend analysis over time reveals whether your organization is getting better or just getting comfortable with drills. Plot your key metrics across quarters. Look for plateaus where improvement stops. Investigate whether a plateau represents a genuine capability ceiling or training fatigue, where teams go through the motions without real engagement.
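Comparing each period with the one before it, and flagging when gains flatten out, takes only a few lines. A minimal sketch, assuming one average response time per quarter for the same scenario. The two-quarter window and 5% minimum gain are hypothetical thresholds, not industry standards. A plateau flag tells you where to look, not whether the cause is a capability ceiling or training fatigue.

```python
def quarter_changes(quarterly_avg: list[float]) -> list[float]:
    """Percent change in average response time between consecutive quarters (negative = faster)."""
    return [100 * (cur - prev) / prev for prev, cur in zip(quarterly_avg, quarterly_avg[1:])]


def is_plateau(quarterly_avg: list[float], window: int = 2, min_gain_pct: float = 5.0) -> bool:
    """True if none of the last `window` quarter-over-quarter changes improved
    response time by at least `min_gain_pct` percent.
    """
    changes = quarter_changes(quarterly_avg)
    if len(changes) < window:
        return False  # not enough history to call a plateau
    # A worsening quarter (positive change) also counts as "no meaningful gain".
    return all(-change < min_gain_pct for change in changes[-window:])
```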

Share results transparently with leadership and frontline teams. When a restaurant franchise shares drill performance data across locations, it creates healthy competition and peer learning. High-performing locations can mentor struggling ones. Executive teams see where to invest in additional training or system improvements.

The Compliance Dimension: What Regulators Want to See

Financial institutions face explicit requirements for testing business continuity plans. NCUA, FFIEC, and FINRA don't just want to see that you conducted drills. They want evidence that drills test actual capabilities and that you address identified deficiencies. Response time data provides objective evidence of preparedness that narrative reports can't match.

FINRA Rule 4370 requires broker-dealers to maintain a written business continuity plan, have it approved by a designated member of senior management, and review it at least annually. When Robinhood's systems failed during a series of outages, the resulting $57 million FINRA fine, which cited inadequate continuity planning among other failures, sent a clear message: regulators expect functional testing, not paper exercises.

Documentation standards require specific detail. Timestamp every action during drills. Record who participated and who didn't. Log what worked and what failed. Capture decisions made and rationale behind them. During audits, examiners ask to see this documentation. Organizations with detailed drill records demonstrate good-faith compliance efforts even if performance isn't perfect.
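One common way to make drill logs tamper-evident is a hash chain: each entry stores a SHA-256 hash of its own contents plus the previous entry's hash, so editing any earlier entry breaks verification for everything after it. This is an illustrative sketch, not a compliance-certified logging system, and the `DrillLog` class and its fields are hypothetical. Anyone able to rewrite the entire log could recompute every hash, and deleting the newest entries leaves the remaining chain valid, so the latest hash should also be stored somewhere the log's editors cannot change.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class DrillLog:
    """Append-only, hash-chained log of drill actions (hypothetical structure)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, with keys in a stable order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, actor: str, action: str, rationale: str = "", when: Optional[datetime] = None) -> dict:
        """Append a timestamped entry linked to the previous entry's hash."""
        entry = {
            "timestamp": (when or datetime.now(timezone.utc)).isoformat(),
            "actor": actor,
            "action": action,
            "rationale": rationale,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry's contents or position no longer match the chain."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```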

Audit-Ready Drill Reports

Your drill documentation should answer five questions: 1) What scenario did you test? 2) Who participated? 3) What were the measurable results? 4) What gaps did you identify? 5) What corrective actions did you take? If you can't answer all five, your documentation is incomplete.
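The five questions translate naturally into a report structure with a completeness check. A minimal sketch with hypothetical field names. An empty `gaps` list is flagged as missing, so a drill that genuinely found no gaps should say so explicitly rather than leave the field blank.

```python
from dataclasses import dataclass, field


@dataclass
class DrillReport:
    """One field per audit question (hypothetical structure)."""
    scenario: str = ""                                       # 1) what scenario was tested
    participants: list[str] = field(default_factory=list)    # 2) who participated
    results: dict[str, float] = field(default_factory=dict)  # 3) measurable results, e.g. stage -> minutes
    gaps: list[str] = field(default_factory=list)            # 4) gaps identified
    corrective_actions: list[str] = field(default_factory=list)  # 5) corrective actions taken

    def missing_sections(self) -> list[str]:
        """Names of the audit questions this report cannot yet answer."""
        return [name for name, value in vars(self).items() if not value]
```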

Board-level reporting transforms drill data from operational metrics to governance tools. Executives need to see trends, understand risks, and approve resource allocation for improvements. A credit union board seeing consistent 12-minute response times when the target is 8 minutes can make informed decisions about technology investments or staffing changes.

Third-party validation adds credibility. Some organizations bring in external consultants to observe drills and provide independent assessment. Others benchmark against industry peers through shared exercises or data exchanges. External perspective helps overcome internal blind spots and organizational complacency.

Making Benchmarks Actionable: What to Do with Your Numbers

Start by establishing your baseline. Run the same drill scenario three times over three months and average the results. That's your current capability. Don't compare yourself to ideal standards yet. First, understand where you actually are.

Set incremental improvement targets. If your current average response time is 18 minutes, don't immediately target 8 minutes. Aim for 15 minutes next quarter. Sustained incremental improvement beats sporadic heroic efforts. Organizations that improve response times by 10-15% per year through consistent attention outperform those that focus intensely for one quarter then ignore the issue.
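The baseline and the stepped targets are simple arithmetic. A minimal sketch, assuming a fixed percentage cut each quarter and a floor at your long-term target; the 15% quarterly cut and 8-minute floor in the example are illustrative, not recommendations.

```python
from statistics import mean


def baseline(runs: list[float]) -> float:
    """Average response time (minutes) across repeated runs of the same scenario."""
    if len(runs) < 3:
        raise ValueError("run the same scenario at least three times before setting a baseline")
    return mean(runs)


def quarterly_targets(start: float, floor: float, quarterly_cut: float, quarters: int) -> list[float]:
    """Lower the target by a fixed fraction each quarter, never going below `floor`."""
    targets = []
    current = start
    for _ in range(quarters):
        current = max(floor, current * (1 - quarterly_cut))
        targets.append(round(current, 1))
    return targets


if __name__ == "__main__":
    start = baseline([17.0, 20.0, 17.0])  # an 18-minute baseline
    print(quarterly_targets(start, floor=8.0, quarterly_cut=0.15, quarters=4))
```

With an 18-minute baseline, these example settings step the target to about 15.3, 13.0, 11.1, and 9.4 minutes over four quarters, approaching the 8-minute floor without jumping straight to it.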

Address your biggest bottleneck first. If notification takes 8 minutes and everything else is fast, fix notification. If decisions get stuck waiting for approval, change your approval process. Spreading effort across multiple small problems dilutes impact. Solve the one thing that slows you down most.
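Picking the biggest bottleneck comes down to finding the stage that contributes the most time. A minimal sketch, assuming you already have average minutes per stage; the stage names are hypothetical.

```python
def biggest_bottleneck(stage_minutes: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage and its share of total response time (0-1)."""
    total = sum(stage_minutes.values())
    if total <= 0:
        raise ValueError("stage times must sum to more than zero")
    stage = max(stage_minutes, key=stage_minutes.get)
    return stage, stage_minutes[stage] / total
```

Reporting the share alongside the name shows whether one fix could move the overall number substantially or whether the delays are spread too evenly for a single target.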

Technology upgrades often provide the fastest improvement. Moving from phone trees to automated multi-channel notification can cut 5 minutes off response time immediately. Replacing PDF playbooks scattered across shared drives with centralized digital access eliminates the roughly 3 minutes teams can lose searching for the right file. These aren't expensive enterprise systems. Modern crisis management platforms designed for mid-market organizations cost less than a single serious incident.

The Cost-Benefit Reality

A 5-minute improvement in response time during a revenue-impacting incident saves approximately $8,000 per hour for a $50M revenue organization. The platform investment pays for itself in one or two incidents.

Training focus should shift based on data. If acknowledgment times are good but task completion is poor, people need procedure training, not notification training. If leadership decisions cause delays, executives need tabletop practice, not frontline staff drills. Match your training investment to your performance gaps.

Celebrate improvements publicly. When a location cuts response time from 15 minutes to 9 minutes, recognize that achievement. When the organization hits a response time target across all sites, mark that milestone. People improve what gets measured and recognized. Ignore the metrics and they'll ignore improvement.

Summary

Response time benchmarks transform crisis drills from compliance checkboxes into diagnostic tools that reveal real organizational capabilities. Organizations that measure, track, and improve response times during drills perform 40% better during actual incidents. But speed alone doesn't guarantee effectiveness. The most valuable drill programs measure response time alongside task completion, role clarity, communication effectiveness, and documentation compliance. Start by establishing your baseline through repeated testing of the same scenarios. Identify your specific bottlenecks through detailed timing of each response stage. Set realistic improvement targets based on your operational context rather than aspirational industry standards. Address your largest delays first through targeted technology upgrades, process refinements, or training interventions. Most importantly, close the loop by using drill data to refine playbooks, adjust procedures, and allocate resources. Drills without measurement provide false confidence. Measurement without action wastes effort. Together, they build the response capability that protects your organization when minutes matter.

Key Things to Remember

- Organizations conducting regular drills with measured response times show 40% faster performance during actual incidents compared to those that drill without metrics.

- Response time breaks into measurable stages: alert transmission, acknowledgment, and activation. Each reveals different organizational capabilities and bottlenecks.

- Reasonable business continuity benchmarks: 2-3 minutes for alert acknowledgment, 5-7 minutes for first substantive action, 15 minutes for full team coordination.

- The acknowledgment-to-action gap reveals the most critical failure point: people confirm receipt but don't know what to do next because playbooks aren't accessible or clear.

- Unannounced drills at varied times produce the most realistic data. Mix announced exercises for training with unannounced tests for honest assessment of readiness.

How Branchly Can Help

Branchly automatically tracks response time at every stage of your crisis drills and real incidents. Our Command Center timestamps alert transmission, acknowledgment, playbook access, and task completion across all locations. You see exactly where delays occur and which teams need additional support. Pre-approved playbooks load instantly for any scenario, eliminating the searching delay that adds minutes to response. Real-time dashboards give you visibility into every branch's status, so coordination gaps surface immediately rather than after the drill ends. Our intelligence layer analyzes every exercise to identify bottlenecks, suggest workflow improvements, and track performance trends over time. The system generates audit-ready documentation automatically, with tamper-resistant logs that satisfy NCUA, FFIEC, and FINRA requirements. Most importantly, Branchly helps you close the loop from measurement to action by highlighting specific procedural changes that will reduce your response time based on your actual performance data.
